Ruler HA

- Author: Soon-Ping Phang

- Date: June 2022

- Status: Deprecated

Introduction

This proposal is deprecated in favor of the new proposal.

This proposal consolidates multiple existing PRs from the AWS team working on this feature, as well as future work needed to complete support. The hope is that a more holistic view will make for more productive discussion and review of the individual changes, as well as provide better tracking of overall progress.

The original issue is #4435.

Problem

Rulers in Cortex currently run with a replication factor of 1, wherein each RuleGroup is assigned to exactly 1 ruler. This lack of redundancy creates the following risks:

- Rule group evaluation

  - Missed evaluations due to a ruler outage, possibly caused by a deployment, noisy neighbour, hardware failure, etc.

  - Missed evaluations due to a ruler brownout caused by other tenants' rule groups sharing the same ruler (noisy neighbour)

- API

  - Inconsistent API results during resharding (e.g. due to a deployment) when rulers are in a transition state loading rule groups

This proposal attempts to mitigate the above risks by enabling a ruler replication factor of greater than 1, allowing multiple rulers to evaluate the same rule group — effectively, the ruler equivalent of ingester HA already supported in Cortex.

Proposal

Make ReplicationFactor configurable

ReplicationFactor in Ruler is currently hardcoded to 1. Making this a configurable parameter is the first step to enabling HA in ruler, and would also be the mechanism for the user to turn the feature on. The parameter value will be 1 by default, equating to the feature being turned off by default.

A replication factor greater than 1 will result in the same rules being evaluated by multiple ruler instances.

This redundancy will allow for missed or skipped rule evaluations from single ruler outages to be covered by other instances evaluating the same rules.
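As a rough illustration of what a replication factor greater than 1 means for rule group ownership, the sketch below picks the owners of a rule group from a simplified hash ring. It is not the Cortex ring implementation: `ringInstance`, `hashKey`, and `ownersFor` are hypothetical names, and each ruler is assumed to hold a single token.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"sort"
)

// ringInstance is a hypothetical, simplified stand-in for a ruler in the ring.
// Assumption: one token per ruler, which is a simplification for illustration.
type ringInstance struct {
	name  string
	token uint32
}

// hashKey maps a rule group key to a position on the ring.
func hashKey(key string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(key))
	return h.Sum32()
}

// ownersFor returns the rulers that evaluate the rule group: the first ruler
// whose token is >= the group's hash, followed by the next rulers clockwise
// until replicationFactor owners have been collected.
func ownersFor(ring []ringInstance, groupKey string, replicationFactor int) []string {
	sorted := append([]ringInstance(nil), ring...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].token < sorted[j].token })
	if replicationFactor > len(sorted) {
		replicationFactor = len(sorted)
	}
	h := hashKey(groupKey)
	start := sort.Search(len(sorted), func(i int) bool { return sorted[i].token >= h })
	owners := make([]string, 0, replicationFactor)
	for i := 0; i < replicationFactor; i++ {
		// The modulo wraps around to the start of the ring.
		owners = append(owners, sorted[(start+i)%len(sorted)].name)
	}
	return owners
}

func main() {
	ring := []ringInstance{
		{"ruler-1", 0x20000000},
		{"ruler-2", 0x60000000},
		{"ruler-3", 0xA0000000},
		{"ruler-4", 0xE0000000},
	}
	key := "tenant-a/rules.yaml/group-1" // hypothetical rule group key
	fmt.Println("replication factor 1:", ownersFor(ring, key, 1))
	fmt.Println("replication factor 3:", ownersFor(ring, key, 3))
}
```

With a replication factor of 1 the sketch returns a single owner, matching the current behaviour; with 3 it returns three distinct rulers, so the group keeps being evaluated if one of them is unavailable.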

There is also the question of duplicate metrics generated by replicated evaluation. We do not expect this to be a problem, as all replicas must use the same slotted intervals when evaluating rules, which should result in the same timestamp applying to metrics generated by each replica, with the samples being deduplicated in the ingestion path.

PR #4712 [open]

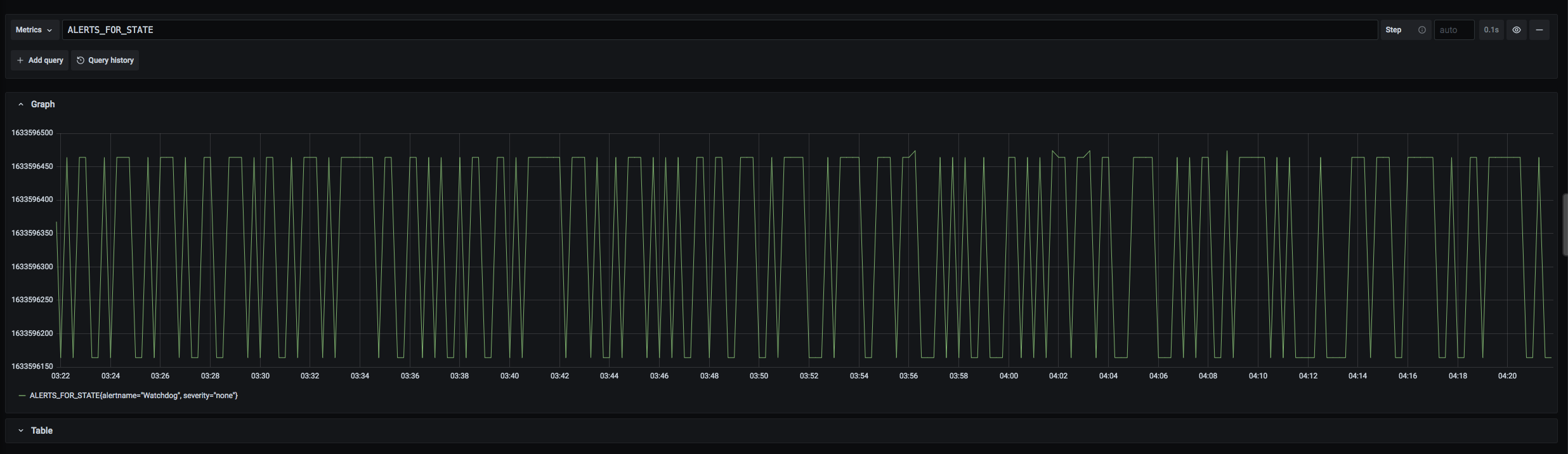

Adjustments for ALERTS_FOR_STATE

When an alert fires in a rulegroup shared across multiple rulers, the alert on each ruler will have a slightly different activeAt timestamp due to the differences in evaluation time on each ruler. Because ALERTS_FOR_STATE records activeAt as its sample value, the replicas write different values for the same sample timestamp, and the samples are rejected with the “duplicate sample for timestamp” error instead of being deduplicated. To prevent this, we synchronize Alert.activeAt for the same alerts in different rulers using a post-processing hook.

PRs:

Weak and Strong Quorum in ListRules and ListAlerts

ListRules/ListAlerts will return inconsistent responses while a new configuration propagates to multiple ruler HA instances. For most users, this is an acceptable side-effect of an eventually consistent HA architecture. However, some use-cases have stronger consistency requirements, and their users are willing to sacrifice some availability to meet them. For example, an alerts client might want to verify that a change has propagated to a majority of instances before informing the user that the update succeeded. To enable this, we propose adding an optional quorum API parameter with the following behaviour:

quorum=weak (default)

- Biased towards availability, ListRules will perform a best-effort merge of the results from at least $i = tenantShardSize - replicationFactor + 1$ instances. If no version of a rulegroup definition satisfies a quorum of $q = \lfloor{replicationFactor / 2}\rfloor + 1$ copies, ListRules will choose the most recently evaluated version of that rulegroup for the final result set. An error response will be returned only if all instances return an error. Note that a side-effect of this rule is that the API will return a result even if all but one of the ruler instances in the tenant’s shard are unavailable.

quorum=strong

- Biased towards consistency, ListRules will query at least $i = tenantShardSize - \lfloor{replicationFactor / 2}\rfloor + 1$ instances. If any rulegroup does not satisfy a quorum of $q = \lfloor{replicationFactor / 2}\rfloor + 1$ copies, a 503 error will be returned.
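For concrete numbers, the sketch below transcribes the three formulas exactly as written in this section; it does not attempt to validate or adjust them. The function names are hypothetical, and Go's integer division stands in for the floor operation on non-negative values.

```go
package main

import "fmt"

// quorum returns q = floor(replicationFactor / 2) + 1.
func quorum(replicationFactor int) int {
	return replicationFactor/2 + 1
}

// weakMinInstances returns i = tenantShardSize - replicationFactor + 1,
// the minimum number of instances queried with quorum=weak.
func weakMinInstances(tenantShardSize, replicationFactor int) int {
	return tenantShardSize - replicationFactor + 1
}

// strongMinInstances returns i = tenantShardSize - floor(replicationFactor / 2) + 1,
// the minimum number of instances queried with quorum=strong, as stated above.
func strongMinInstances(tenantShardSize, replicationFactor int) int {
	return tenantShardSize - replicationFactor/2 + 1
}

func main() {
	shardSize, rf := 5, 3 // example values chosen for illustration
	fmt.Println("q:", quorum(rf))
	fmt.Println("weak i:", weakMinInstances(shardSize, rf))
	fmt.Println("strong i:", strongMinInstances(shardSize, rf))
}
```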

PR #4768 [open]

Alternatives to a quorum API parameter

Weak or strong quorum by default

Another option for making ListRules work in HA mode is to implement one of the quorum rules (weak or strong) as the default, with no control to select between the two other than perhaps a configuration parameter. AWS itself runs multitenant Cortex instances, and we have an internal use-case for the strong quorum implementation, but we do not want to impose the resulting availability hit on our customers, particularly given that replication_factor is not currently a tenant-specific parameter in Cortex for ingesters, alert manager, or ruler.

Making availability the default, while giving users the choice to knowingly request more consistency at the cost of more error handling, seems like a good balance.